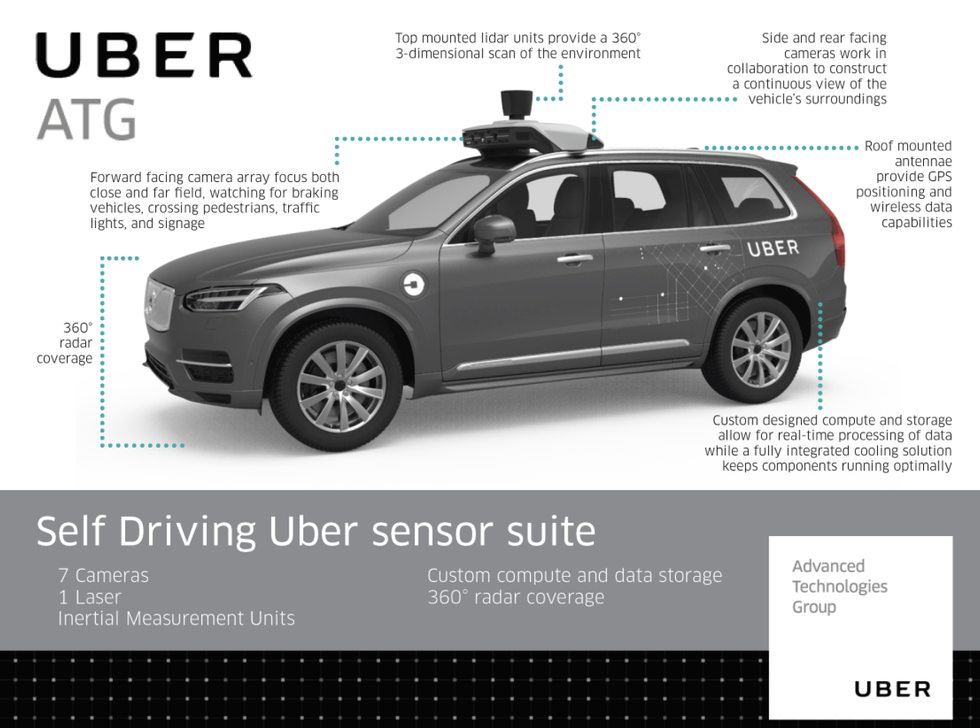

Lidar, radar and cameras: This is the tech used by autonomous Ubers to see the world around them

Investigators are working to determine why a self-driving Uber vehicle struck and killed a pedestrian

Self-driving cars currently being developed by Waymo, Tesla, Uber and other companies carry similar systems to help them 'see', interpret and react to the world around them.

There are some differences between each company's approach. Tesla boss Elon Musk, for example, strongly believes his cars do not need lidar, as used by Waymo and Uber — but with a shared end goal of full autonomy and no human involvement, they all work in a similar way.

Right now, these technologies find themselves under a searing spotlight, after one of Uber's autonomous test vehicles struck and killed a pedestrian as they crossed a public road in Arizona earlier this week. Had such a tragedy involved a regular human-driven car, the incident would likely have gone no further than the local Tempe newspaper.

But the reality is that 49-year-old Elaine Herzberg will likely go down in history as the first pedestrian to be killed by an autonomous car.

Joshua Brown may have been the first killed in a partially autonomous car when his Tesla, with Autopilot engaged, fatally collided with a truck crossing the road ahead of him in 2016. But an investigation by the US National Highway Traffic Safety Administration (NHTSA) found Brown had ignored several warnings by the car to take hold of the wheel. Responsible for the use of Autopilot, Brown was ultimately in control of the vehicle.

While Autopilot was then — and remains today — a form of advanced cruise control, Uber is using a fleet of test vehicles to create fully autonomous cars. The technology needs to be bulletproof, but on March 18 an Uber vehicle traveling at just 38mph failed to react to a pedestrian crossing the road ahead.

Here is a look at the technologies used by autonomous vehicles, including those operated by Uber:

First, the simplest technology. Uber's fleet of modified Volvo XC90 SUVs have an array of visible light cameras on their roofs, plus more cameras facing to the sides and behind the car.

These are used primarily to read road signs, traffic lights and the brake lights of other vehicles. Like our own eyes, they are good at spotting colors and reading signs. A wide range of cars currently on sale use similar cameras to inform the driver of the current speed limit and as a basic form of object detection.

The cameras feed their footage to an onboard computer which employs advanced algorithms to paint a picture of the vehicle's environment.

The computer analyzes the cameras' video feed in real time to work out what is going on; it is smart enough to spot different types of vehicles, differentiate the sky from the road ahead, and identify pedestrians at the side of the road.

Anyone who has driven a modern car with automatic emergency braking and pedestrian detection systems will be aware of how this technology works. They will also likely have experienced occasions when a false positive has caused the car to beep loudly and flash warnings at the driver.

In my own experience, I have seen false positives triggered when driving around a car parked at the side of the road, as the system thinks I should be following the stationary vehicle, so warns me when it thinks I should be stopping behind it. I have also had alerts when a pedestrian walks halfway across the road and waits in the middle for me to pass; here, the system has identified a person and issues a warning because it isn't aware of the eye contact they have made with me, or the exact details of the situation.

Uber describes the cameras fitted to its Volvos as a "forward facing camera array [which is used to] focus both close and far field, watching for braking vehicles, crossing pedestrians, traffic lights, and signage."

At night, visible light cameras, much like our own eyes, are less reliable and send a less detailed picture to the car's computer. Thankfully, autonomous cars like those developed by Uber have two more vision systems, which work equally well in light and dark.

Radar systems emit radio waves then build a picture of the environment based on the pattern of the waves as they bounce back. Autonomous cars used by a range of manufacturers, including Uber, use radar.
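The basic idea of ranging with a reflected wave can be sketched in a few lines of Python. This is a simplified illustration of the principle, not a description of how Uber's radar (or any production automotive radar) processes its signals; the function name `echo_range_m` and the one-microsecond echo are made up for the example.

```python
# Sketch only: production radars use far more elaborate signal processing.
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radio waves travel at the speed of light


def echo_range_m(round_trip_s: float) -> float:
    """Distance to a reflector, given the echo's round-trip time in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The wave travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# Hypothetical echo arriving one microsecond after the wave was sent.
print(f"{echo_range_m(1e-6):.1f} m")  # about 150 meters
```

Because the wave covers the distance twice, an echo that returns after a single microsecond already corresponds to an object roughly 150 meters away.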

Radar is also used by many cars currently on sale; the technology is employed by adaptive cruise control systems which maintain a safe distance from the vehicle ahead by automatically matching their speed.
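As a rough illustration of that speed-matching behavior, here is a minimal Python sketch of a follow-the-leader rule. It is not any manufacturer's algorithm: the function name, the two-second time gap, the gain and the maximum set speed are all assumptions chosen for the example.

```python
def acc_target_speed(own_speed_m_s: float, lead_speed_m_s: float, gap_m: float,
                     time_gap_s: float = 2.0, gain_per_s: float = 0.5,
                     set_speed_m_s: float = 31.0) -> float:
    """Return a speed command (m/s) that follows the lead vehicle.

    All default values are illustrative assumptions, not real-world settings.
    """
    # Desired following distance grows with our own speed.
    desired_gap_m = own_speed_m_s * time_gap_s
    gap_error_m = gap_m - desired_gap_m
    # Match the lead vehicle's speed, nudged up or down to correct the gap.
    command = lead_speed_m_s + gain_per_s * gap_error_m
    # Never command reverse, and never exceed the driver's set speed.
    return max(0.0, min(command, set_speed_m_s))


# Following at the desired gap: simply match the lead vehicle's speed.
print(acc_target_speed(own_speed_m_s=25.0, lead_speed_m_s=25.0, gap_m=50.0))
# Too close: the command drops below the lead vehicle's speed.
print(acc_target_speed(own_speed_m_s=25.0, lead_speed_m_s=25.0, gap_m=30.0))
```

The radar supplies the two inputs this rule depends on: the gap to the vehicle ahead and that vehicle's speed.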

Unlike cameras, the amount of light makes no difference at all to how a radar works, although adverse weather conditions like heavy snow and thick fog can limit the quality of the images it produces.

According to Uber, its Volvo XC90s make use of "360-degree radar coverage."

Used by cruise control systems for many years, radar works best when identifying larger objects like buildings and other vehicles. It is not as good at detecting small, softer objects like pedestrians and cyclists. To help fill these gaps, autonomous cars — unlike vehicles currently on sale — use lidar.

Lidar works in a similar way to radar, but instead of emitting radio waves it uses infrared light.

An autonomous car's lidar system often looks like a spinning cylinder and is usually fitted to the roof, where it can see in every direction. The lidar system rotates quickly, capturing 3D images of the car's surroundings many times each second. Just as radar bounces radio waves off the environment, lidar does the same with infrared lasers.
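To give a sense of how a spinning sensor turns individual laser returns into a 3D picture, the Python sketch below converts one return (a measured distance plus the angles the laser was pointing at) into an x, y, z point. The function name and the axis convention (x forward, y left, z up) are assumptions made for illustration; real lidar units define their own coordinate frames and data formats.

```python
import math


def lidar_return_to_xyz(range_m: float, azimuth_deg: float,
                        elevation_deg: float) -> tuple[float, float, float]:
    """Convert one laser return to a 3D point in the sensor's frame.

    Assumed convention: x forward, y left, z up; azimuth is measured
    counterclockwise from straight ahead, elevation upward from horizontal.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal_m = range_m * math.cos(el)  # distance along the ground plane
    x = horizontal_m * math.cos(az)
    y = horizontal_m * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)


# A hypothetical return 20 m away, 30 degrees to the left, slightly downward.
print(lidar_return_to_xyz(20.0, 30.0, -2.0))
```

Repeating this conversion for every return collected during one rotation yields the cloud of points that makes up a single 3D image.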

The most advanced lidar systems can emit invisible light millions of times per second, building up an incredibly detailed image which the car's computer can then use to interpret what's going on and predict what will happen next. The latest system developed by lidar company Velodyne, the VLS-128, uses 128 laser beams fired to a distance of 300 meters. As the system spins through 360 degrees, a 3D image is created - and updated with up to four million new data points every second.
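Some quick arithmetic puts those figures in perspective. The Python sketch below uses the numbers quoted above (128 beams, 300 meters, four million points per second) and one value that is purely an assumption for the sake of the example: a rotation rate of 10 turns per second, which is not stated here.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

BEAMS = 128                    # from the figures quoted above
MAX_RANGE_M = 300.0            # from the figures quoted above
POINTS_PER_SECOND = 4_000_000  # from the figures quoted above
ROTATIONS_PER_SECOND = 10.0    # ASSUMPTION for illustration only


def round_trip_time_s(distance_m: float) -> float:
    """Time for a laser pulse to reach a target and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


def points_per_rotation(points_per_second: float, rotations_per_second: float) -> float:
    """Data points collected during one full 360-degree sweep."""
    return points_per_second / rotations_per_second


def azimuth_step_deg(points_per_second: float, rotations_per_second: float,
                     beams: int) -> float:
    """Horizontal angle between successive firings of the same beam."""
    firings_per_beam = points_per_rotation(points_per_second, rotations_per_second) / beams
    return 360.0 / firings_per_beam


print(f"Round trip at max range: {round_trip_time_s(MAX_RANGE_M) * 1e6:.2f} microseconds")
print(f"Points per rotation: {points_per_rotation(POINTS_PER_SECOND, ROTATIONS_PER_SECOND):,.0f}")
print(f"Angle between firings: {azimuth_step_deg(POINTS_PER_SECOND, ROTATIONS_PER_SECOND, BEAMS):.4f} degrees")
```

Under that assumed rotation rate, each sweep would contain 400,000 points, with each beam firing roughly every tenth of a degree. A pulse sent to the full 300-meter range returns in about two millionths of a second.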

It isn't known what lidar system Uber uses. The company says its Volvos have "top mounted lidar units [which] provide a 360-degree, three dimensional scan of the environment."

As with radar, heavy fog and snow can limit how far and in how much detail a lidar system can see, and its accuracy decreases with range.

As for Musk's reluctance to use lidar on Teslas, he said on an earnings call in February this year: "In my view, it's a crutch that will drive companies to a local maximum that they will find very hard to get out of. Perhaps I am wrong, and I will look like a fool. But I am quite certain that I am not."

Musk believes cameras, with their increasing abilities and lower prices, are the future of autonomous driving; not lidar. Musk also claims Tesla's computing power and sophisticated "neural net" will "see through" fog, rain, snow and dust in a way he believes lidar cannot.

In a promotional video featuring its self-driving technology, above, Uber says its lidar and radar systems "scan the environment and make sure the vehicle is aware of everything around it, like that stop sign up ahead, that woman crossing the street, and the cyclist coming up behind them."

Uber also says its safety drivers and vehicles are trained to "expect the unexpected, like swinging car doors, pedestrians, and unusual roadways."

'Difficult to avoid this collision'

Footage released by the Tempe police department shows the seconds leading up to the collision in Arizona. But while it seems clear that a human would have struggled to see Herzberg until it was too late, this doesn't mean the car's radar and lidar systems were caught equally unaware.

Indeed, Uber's promotional video shows how its Volvo can 'see' pedestrians crossing the road ahead in the same way it can spot other vehicles.

Lidar's ability to see as clearly at night as during the day also raises questions over comments made by Tempe police chief Sylvia Moir, who said a day after the incident: "It's very clear it would have been difficult to avoid this collision in any kind of mode [autonomous or human-driven] based on how [Herzberg] came from the shadows right into the roadway."

It is unknown what experience Moir has in relation to lidar systems and how autonomous vehicles work. While it is true that a pedestrian emerging from a shadow, as Herzberg did, would be tricky for a human to see and react to in time, lidar works very differently to the human eye.

What investigators will no doubt be concentrating on is what the car itself was able to see — not what a regular camera saw, but what the car's radar and lidar picked up from their constant scanning in every direction.

It will now be the job of the police, Uber and the US National Transportation Safety Board (NTSB) to work out what happened, what didn't happen, and who is responsible.
