Voice commands are dangerously insecure: These researchers have a plan to fix them

Prototype device matches voice vibrations with what our devices hear us say

We're using our voices to interact with technology more than ever before, from Siri and Alexa to our car's satellite navigation system, smart home security, and even our bank's supposedly secure automated phone system.

But until now, researchers say, not enough has been done to make sure our voices are secure. They can be mimicked too easily, by a computer or another human, and real damage can be done simply by speaking to an unsuspecting and insecure artificial intelligence.

This is where a team of researchers from the University of Michigan believe they can help. Led by Kang Shin, a professor of computer science and electrical engineering, they have created a device which matches the sound heard by a computer with the vibrations our voice creates on our ears and neck.

Shin says: "Increasingly, voice is being used as a security feature but it actually has huge holes in it. If a system is using only your voice signature, it can be very dangerous. We believe you have to have a second channel to authenticate the owner of the voice."

While authenticating your requests for Alexa to play some music or set a timer might seem unnecessary, such assistants can be used maliciously. If configured to wake with the 'hey Siri' command, a victim's unattended iPhone could be commanded by a nearby attacker to send offensive or potentially damaging text messages.

Hacking into a voice system could also have financial consequences. Earlier this year, a BBC reporter and his twin were able to fool the automated phone banking system of HSBC, letting one brother log in to the other's account.

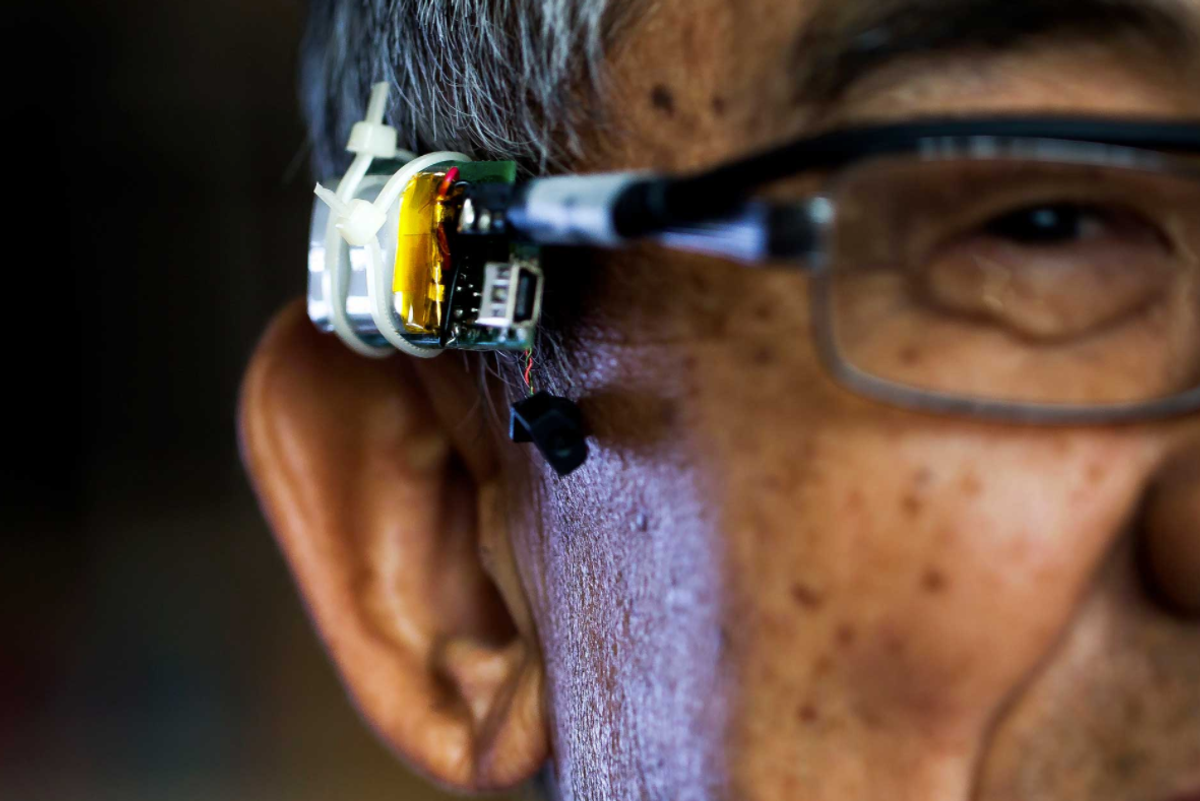

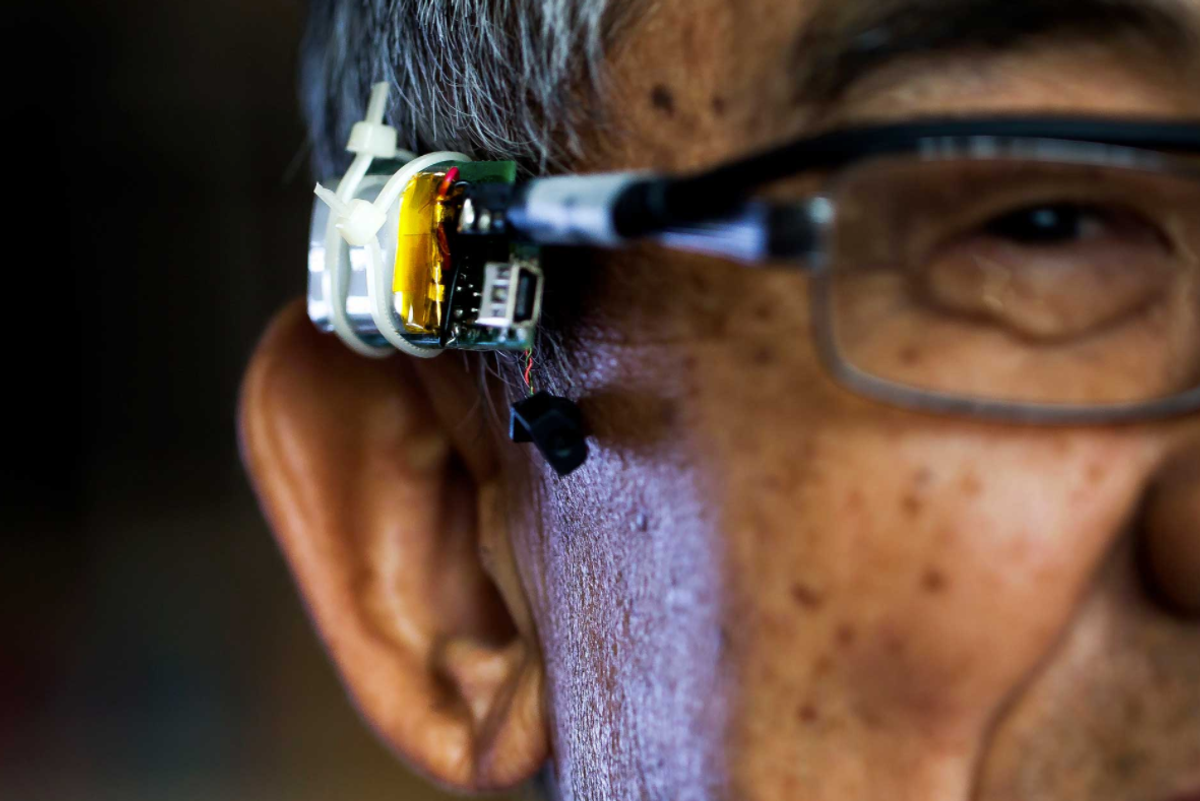

A prototype device created by the team at the University of Michigan attaches to a pair of glasses and rests just above the wearer's ear. The device uses an off-the-shelf accelerometer to measure motion and a Bluetooth transmitter to communicate with whatever device the user is speaking to. The team has also created software to make the device work with voice-activated Google Now commands.

The device records vibrations created when the wearer speaks, then matches them to what the target device, such as a smartphone or a speaker like the Amazon Echo, hears. Shin says the system, called VAuth, is "the first attempt to secure this service, ensuring that your voice assistant will only listen to your commands instead of others.

"It delivers physical security, which is difficult to compromise even by sophisticated attackers. Only with this guarantee can the voice assistant be trusted as personal and secure, especially in scenarios such as banking or home safety."
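To make the matching idea concrete, here is a minimal Python sketch of how a vibration recording might be compared with what a microphone hears. The article does not describe the researchers' actual algorithm, so everything here is an assumption for illustration: the use of normalized correlation, the function names, the 0.8 threshold and the small lag window that tolerates transmission delay are all hypothetical.

```python
import math


def normalized_correlation(a, b):
    """Pearson correlation between two equal-length sample sequences."""
    n = len(a)
    if n == 0 or n != len(b):
        raise ValueError("signals must be non-empty and of equal length")
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a flat signal carries no information to match on
    return sum(x * y for x, y in zip(da, db)) / denom


def best_correlation(vibration, audio, max_lag=5):
    """Highest correlation found over small time shifts between the signals."""
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = vibration[lag:], audio[:len(audio) - lag]
        else:
            a, b = vibration[:len(vibration) + lag], audio[-lag:]
        n = min(len(a), len(b))
        if n < 2:
            continue
        best = max(best, normalized_correlation(a[:n], b[:n]))
    return best


def command_matches(vibration, audio, threshold=0.8, max_lag=5):
    """Accept a command only if the wearer's vibrations track the audio.

    The threshold is a hypothetical value, not one taken from the study.
    """
    return best_correlation(vibration, audio, max_lag) >= threshold
```

In this sketch, a command spoken by the wearer would produce two closely correlated signals and pass the check, while a command played or spoken by someone else would reach the microphone without matching vibrations on the wearable and be rejected.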

In tests with 18 users and 30 voice commands, the system achieved 97 percent detection accuracy and returned a false positive, potentially letting an attacker through the victim's banking security, only 0.1 percent of the time.

And while some voice systems can be trained to only respond to commands from their owner, this is still an insecure system, says Kassem Fawaz, who also worked on the project. "A voice biometric, similar to a fingerprint, is not easy to keep protected. From a few recordings of the user's voice, an attacker can impersonate the user by generating a matching 'voice print.' The users can do little to regain their security as they cannot simply change their voice."
